A/B testing: lessons learned from the Liftshare Tech Team


This year our Tech Team have increased the number of experiments they run on Liftshare.com, implementing dozens of them, often as multi-variant A/B tests. In this post I share some of the lessons learnt from our Chief Product Officer, Sergio.

In case you’re not familiar with the term, according to Optimizely, A/B testing (also known as split testing) “is a method of comparing two versions of a webpage or app against each other to determine which one performs better. A/B testing is essentially an experiment where two or more variants of a page are shown to users at random, and statistical analysis is used to determine which variation performs better for a given conversion goal”.
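In practice, showing variants "at random" usually also needs to be stable: a returning user should keep seeing the same variant for the whole experiment. One common way to get both properties is hash-based bucketing. The Python sketch below is an illustration under that assumption, not necessarily how Optimizely or Liftshare assign users; the function name and the experiment name are hypothetical.

```python
import hashlib
from typing import Sequence


def assign_variant(user_id: str, experiment: str, variants: Sequence[str]) -> str:
    """Deterministically assign a user to one of an experiment's variants.

    Hashing "experiment:user_id" spreads users roughly evenly across the
    variants, keeps each user's assignment stable across visits, and lets
    different experiments split the same users independently.
    """
    if not variants:
        raise ValueError("an experiment needs at least one variant")
    key = f"{experiment}:{user_id}".encode("utf-8")
    bucket = int(hashlib.sha256(key).hexdigest(), 16) % len(variants)
    return variants[bucket]


# Hypothetical experiment name; a real user id would come from a cookie
# or the logged-in account.
print(assign_variant("user-42", "add-journey-form", ["A", "B"]))
```

Because no assignment table is stored, any server can compute the same answer for the same user, and adding a variant only means passing a longer list.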

1/ Define the problem before jumping to solutions. It’s easy to fall into the trap of thinking of a solution to a problem right away, without paying enough attention to the problem itself. For instance, if we wanted to improve the response rate of our members to messages they’ve received through the platform (problem definition), we might find a range of solutions in different areas (implementing push notifications in our apps, enhancing the emails that are triggered with each message, creating a ‘notifications centre’ that users can see when they log in, etc). A clear definition of the problem we’re facing allows us to test various solutions more systematically, without committing to any of them before we’ve measured their impact.

Organising periodic brainstorming sessions where the team focuses on various problems and comes up with ideas for experiments has worked well for us.

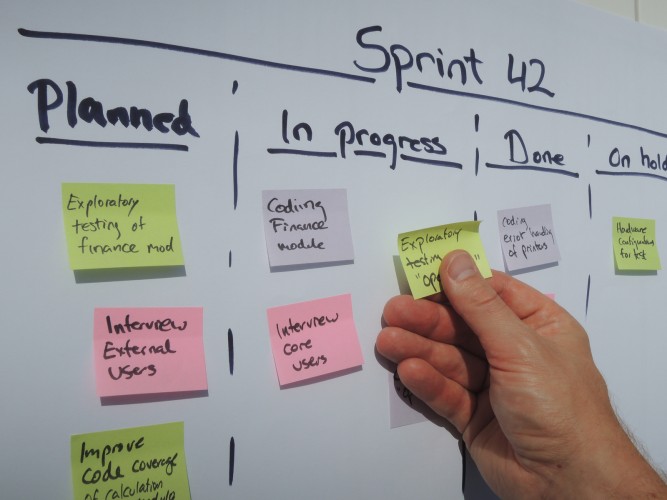

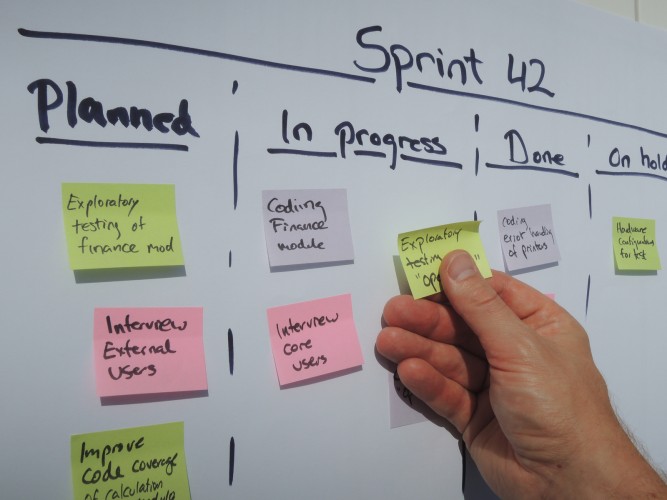

2/ Keep discipline. It’s easy to run a couple of A/B tests and then forget about them. At Liftshare, my team has a minimum number of experiments to carry out each quarter. This has forced us to make experiments part of our work methodology (in our case, the agile methodology known as ‘Kanban’). The key thing is not so much running successful experiments as committing to run a certain number of experiments no matter what. If every new feature a team develops ships with a variant A and a variant B, the team will simply learn much more about that functionality. Of course, building things this way requires discipline and a change of mentality: it’s about delivering as fast as possible, yes, but equally about maximising what we learn about user behaviour.

3/ Learn from inconclusive and failed experiments. Don’t go nuts if your experiments don’t increase conversion as you hoped. In fact, as the guys from Optimizely point out, about 60% of experiments are inconclusive, while around 10% lead to some degree of regression. In other words, only about 30% of your experiments might lead to a higher conversion rate, which makes learning from the remaining 70% essential. In many cases a failure reveals something new about how our users behave, and that can lead us to a winning experiment.
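One way to sort finished tests into those three buckets (winner, inconclusive, regression) is to look at a confidence interval for the difference in conversion rates between a variant and the control. The Python sketch below assumes a simple two-variant comparison and uses the textbook normal-approximation (Wald) interval; it is not necessarily what Optimizely's own tooling does, and the function name is hypothetical.

```python
import math
from statistics import NormalDist


def classify_result(conv_a: int, n_a: int, conv_b: int, n_b: int,
                    confidence: float = 0.95) -> str:
    """Label variant B against control A as "winner", "regression" or "inconclusive".

    conv_* are conversions and n_* are users exposed to each variant.
    Uses a normal-approximation confidence interval for p_b - p_a.
    """
    p_a, p_b = conv_a / n_a, conv_b / n_b
    diff = p_b - p_a
    se = math.sqrt(p_a * (1 - p_a) / n_a + p_b * (1 - p_b) / n_b)
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)  # about 1.96 for 95%
    low, high = diff - z * se, diff + z * se
    if low > 0:
        return "winner"        # whole interval above zero: B converts better
    if high < 0:
        return "regression"    # whole interval below zero: B converts worse
    return "inconclusive"      # interval straddles zero: no clear difference


# 10% vs 10.5% conversion on 1,000 users each is too close to call.
print(classify_result(100, 1000, 105, 1000))  # inconclusive
```

With a multi-variant test, each variant would be compared against the control in turn; the more variants you compare, the more likely one of them looks like a winner by chance alone.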

For example, when we tried about four variants of a button that drove traffic from the main Liftshare landing page to our ‘business solutions’ site, users reacted to the new designs either by clicking less or by showing no preference for one variant over another (see screenshot below).

On a more positive note, when we simplified the ‘add a journey’ form, cutting it from three steps to two, conversion went up by 20% (see image below).

4/ When do you have statistical significance? Some time ago we met Benjamin Mitchell, a former product manager at BBC.com, which attracts about 1m visits a day. At the BBC, they were able to draw precise conclusions about a particular experiment in a matter of hours. However, on websites with medium or low traffic, and depending on the business model, you might be able to reach some solid conclusions with a few hundred hits rather than thousands. If you know your product and your market, you’ll be able to make that call.
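How many hits are enough depends mostly on your baseline conversion rate and on how large an effect you want to be able to detect. As a rough guide, here is a Python sketch of the standard sample-size formula for comparing two proportions with a two-sided test. The 5% significance level and 80% power defaults are conventional choices, not figures from this article, and the function name is hypothetical.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline: float, relative_lift: float,
                            alpha: float = 0.05, power: float = 0.8) -> int:
    """Users needed in each variant to detect the given relative lift.

    baseline: control conversion rate, e.g. 0.10 for 10%.
    relative_lift: smallest lift worth detecting, e.g. 0.20 for +20%.
    """
    p1 = baseline
    p2 = baseline * (1 + relative_lift)
    p_bar = (p1 + p2) / 2
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance
    z_beta = NormalDist().inv_cdf(power)           # desired statistical power
    numerator = (z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return math.ceil(numerator / (p2 - p1) ** 2)


print(sample_size_per_variant(0.10, 0.20))  # a modest +20% relative lift
print(sample_size_per_variant(0.10, 1.00))  # conversion doubling
```

With a 10% baseline, detecting a 20% relative lift needs close to 4,000 users per variant, while a doubling of conversion needs only about 200. That suggests a few hundred hits are enough only when the change you are testing has a large effect.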

5/ Produce publicly testable information. Decision-making processes often follow the well-known ‘most important person model’, whose opinion might or might not be based on numbers. Experiments give you the opportunity to refute myths and urban legends at your company (which at the end of the day could just be untested hypotheses), but only if you share the results. If you can, find the right forums to communicate the value of your experiments.

6/ Track experiments on a dedicated Kanban board. Tracking experiments on a separate Kanban board or project management tool (such as Jira or Trello) can make a lot of sense for some teams. It’s a way of acknowledging their importance and making them visible to everyone in the team. For example, at Liftshare we have a development Kanban board and an experiments Kanban board. The experiments board tracks each experiment and its status, while the development board holds the underlying tasks needed to actually run a given experiment, with no duplication between the two.

Even though it can be time-consuming, preparing a brief report for each experiment helps you cover all the basics to consider before and after running it. What’s more, it lets you go back to past experiments when you need to, and makes sure the learnings have been properly recorded. As mentioned in point #3, extracting learnings from experiments is paramount; documenting them properly, in an accessible way, is arguably just as important.
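The report does not need to be elaborate. Purely as an illustration, here is a hypothetical Python record holding the kind of fields such a report might contain; the field names and the summary format are assumptions, not Liftshare's actual template.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import List, Optional


@dataclass
class ExperimentReport:
    """Hypothetical one-page record of a single experiment."""
    name: str
    problem: str        # the problem, defined before any solution (point 1)
    hypothesis: str
    primary_kpi: str    # agreed up front so tracking can be planned (point 7)
    variants: List[str]
    start: date
    end: Optional[date] = None
    outcome: str = "running"  # e.g. "winner", "inconclusive", "regression"
    learnings: List[str] = field(default_factory=list)

    def summary(self) -> str:
        """Render the report as plain text for a shared wiki or board card."""
        finish = self.end.isoformat() if self.end else "ongoing"
        lines = [
            f"Experiment: {self.name} ({self.start.isoformat()} to {finish})",
            f"Problem: {self.problem}",
            f"Hypothesis: {self.hypothesis}",
            f"KPI: {self.primary_kpi}",
            f"Variants: {', '.join(self.variants)}",
            f"Outcome: {self.outcome}",
        ]
        lines += [f"Learning: {item}" for item in self.learnings]
        return "\n".join(lines)
```

Keeping the problem, hypothesis and KPI next to the outcome and learnings means that a reader coming back months later can see why the test was run, not just what happened.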

7/ Use different data sources if needed. A single tracking tool might not be enough to get the full picture of a particular test (although, by all means, having clear KPIs from the start will make it much easier to identify the tracking that needs to be in place). We might have to use a range of tools, such as Google Analytics events, our own database, cohorts and funnels, or even platforms like Usertesting.com or Amazon Mechanical Turk to see real users ‘in motion’. Tracking tools are not perfect, and if you don’t look at the numbers from different angles, you might miss a variant that would have ended up being a winner.

8/ Ensure your system is flexible enough. When we first started introducing experiments into our workflow, we relied heavily on ‘hard-coded’ data tracking, and any change to an experiment, no matter how small, required a full system deploy. Over time we’ve introduced various tools, both custom and pre-built, which have proven vital to our experimentation process. Systems such as Google Tag Manager are perfect for rapidly adding specific tracking events without a full re-deployment, and without these tools we would be able to run far fewer experiments.
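The same idea can apply to the experiments themselves, not just the tracking: if variant splits live in configuration that is read at runtime rather than in code, changing one no longer needs a deploy. A minimal Python sketch, assuming a JSON config format and function names invented for illustration (this is not how Google Tag Manager works internally):

```python
import json


def load_experiments(raw_json: str) -> dict:
    """Parse experiment definitions and check each one's variant weights."""
    experiments = json.loads(raw_json)
    for name, spec in experiments.items():
        weights = spec["weights"]
        if not weights or abs(sum(weights.values()) - 1.0) > 1e-9:
            raise ValueError(f"weights for {name!r} must be non-empty and sum to 1")
    return experiments


def pick_variant(spec: dict, bucket: float) -> str:
    """Map a uniform bucket value in [0, 1) to a variant via cumulative weights."""
    cumulative = 0.0
    for variant, weight in spec["weights"].items():
        cumulative += weight
        if bucket < cumulative:
            return variant
    # Floating-point rounding can leave the running total a hair below 1.
    return list(spec["weights"])[-1]


# In a real system the JSON would be fetched from a file or config service
# at runtime; the experiment name here is hypothetical.
config = load_experiments('{"add-journey-form": {"weights": {"A": 0.5, "B": 0.5}}}')
print(pick_variant(config["add-journey-form"], 0.73))  # prints B
```

The bucket value would typically be derived from a hash of the user id, so each user keeps the same variant while the split itself can be adjusted without touching the code.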

(Original article posted here).

Author Lex Barber
